OpenAI, a leading artificial intelligence research laboratory, has unveiled its latest flagship model: GPT-4o.

The "o" stands for "omni," and the model marks a significant step toward more natural human-computer interaction: it accepts any combination of text, audio, image, and video as input and can generate any combination of text, audio, and image output.

One of the most impressive features of GPT-4o is its ability to respond to audio inputs in as little as 232 milliseconds, with an average response time of 320 milliseconds.

This near-human response time sets GPT-4o apart from its predecessors and paves the way for more natural and seamless conversations between humans and AI.

In terms of performance, GPT-4o matches GPT-4 Turbo on English text and code while significantly improving on text in non-English languages. It is also much faster than GPT-4 Turbo and 50% cheaper in the API.

The model’s vision and audio understanding capabilities have also been greatly enhanced compared to existing models.

Unlike the previous Voice Mode, which relied on a pipeline of separate models for audio transcription, text processing, and audio generation, GPT-4o is a single model trained end-to-end, so all inputs and outputs are processed by the same neural network.

This unified approach allows GPT-4o to directly observe tone, multiple speakers, and background noise, and to express emotion through output such as laughter and singing.

OpenAI has also made significant strides in tokenization: GPT-4o's new tokenizer compresses text considerably better across many language families. For example, it needs 4.4x fewer tokens for Gujarati, 3.5x fewer for Telugu, and 3.3x fewer for Tamil than the previous tokenizer. The improvement extends to a wide range of other languages, including Arabic, Persian, Russian, and Korean.
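The lower per-token price and the smaller token counts compound. Here is a rough back-of-the-envelope sketch, assuming the 50% saving applies uniformly per token (input and output alike) and that a given text shrinks by exactly the quoted ratio. `PRICE_RATIO`, `relative_cost`, and `tokens_after` are illustrative names, not part of any OpenAI tool.

```python
# Compression ratios quoted above: tokens with the previous tokenizer
# divided by tokens with GPT-4o's tokenizer.
COMPRESSION = {"Gujarati": 4.4, "Telugu": 3.5, "Tamil": 3.3}

# Assumption: GPT-4o's per-token API price is half of GPT-4 Turbo's.
PRICE_RATIO = 0.5


def tokens_after(old_tokens: int, language: str) -> int:
    """Estimate the GPT-4o token count for a text that previously took old_tokens."""
    return round(old_tokens / COMPRESSION[language])


def relative_cost(language: str, price_ratio: float = PRICE_RATIO) -> float:
    """Cost of the same text on GPT-4o as a fraction of its GPT-4 Turbo cost:
    the per-token price ratio divided by the token reduction factor."""
    return price_ratio / COMPRESSION[language]


if __name__ == "__main__":
    for lang in COMPRESSION:
        print(f"{lang}: {tokens_after(1000, lang)} tokens per former 1,000, "
              f"{relative_cost(lang):.1%} of the previous cost")
```

Under these assumptions, a Gujarati text that used to cost a given amount would cost roughly 11% as much on GPT-4o. Real savings depend on the actual text and on the mix of input and output tokens.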

Safety has been a key consideration in the development of GPT-4o. The model has built-in safety features across all modalities, achieved through techniques such as filtering training data and refining the model’s behavior post-training.

New safety systems have also been implemented to provide guardrails on voice outputs. Extensive evaluations and external red teaming with over 70 experts in various domains have been conducted to identify and mitigate potential risks.

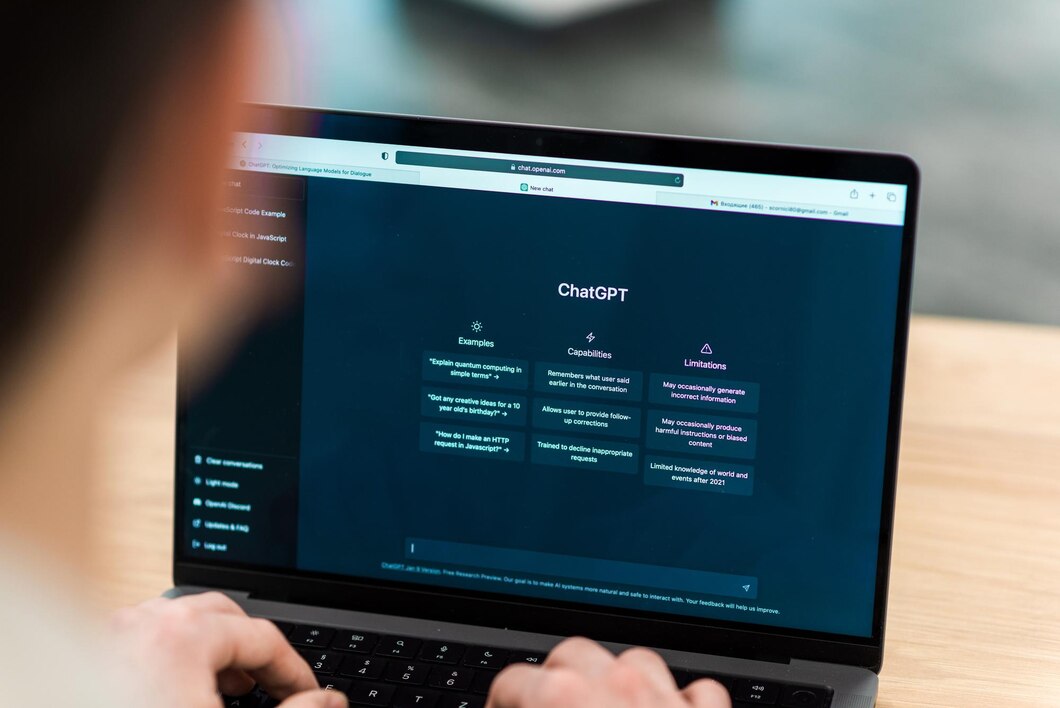

While GPT-4o represents a significant leap forward in AI language models, OpenAI acknowledges that there are still limitations to be addressed. The company encourages feedback from users to help identify tasks where GPT-4 Turbo may still outperform GPT-4o, allowing for continuous improvement of the model.

As of today, GPT-4o’s text and image capabilities are being rolled out in ChatGPT, with the model available in the free tier and to Plus users with up to 5x higher message limits. A new version of Voice Mode with GPT-4o will be introduced in alpha within ChatGPT Plus in the coming weeks.

Developers can now access GPT-4o in the API as a text and vision model, with support for audio and video capabilities planned for release to a small group of trusted partners in the near future.
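As an illustration of using GPT-4o as a text and vision model, here is a minimal sketch of a Chat Completions request that pairs a text prompt with an image. It assumes the official `openai` Python SDK (installed separately, with `OPENAI_API_KEY` set) and the model identifier `gpt-4o`; the image URL and the helper names `build_vision_request` and `send` are hypothetical.

```python
import json


def build_vision_request(prompt: str, image_url: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions request body pairing a text prompt with an image."""
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


def send(request: dict) -> str:
    """Send the request with the openai SDK and return the reply text.

    Requires `pip install openai` and the OPENAI_API_KEY environment variable.
    """
    from openai import OpenAI  # imported lazily so the builder works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(**request)
    return response.choices[0].message.content


if __name__ == "__main__":
    # Hypothetical image URL, for illustration only; prints the payload
    # instead of calling the API.
    req = build_vision_request("Describe this image.", "https://example.com/photo.jpg")
    print(json.dumps(req, indent=2))
```

Keeping the payload builder separate from the network call makes the request shape easy to inspect without credentials. Audio and video input are not shown, since the article describes them as limited to trusted partners at launch.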

The introduction of GPT-4o marks an exciting new chapter in the evolution of AI language models, offering users a more natural, efficient, and engaging way to interact with computers.

As OpenAI continues to push the boundaries of deep learning, the potential applications for this technology are vast and promising.
